VisionCam: A Comprehensive XAI Toolkit for Interpreting Image-Based Deep Learning Models


Abstract

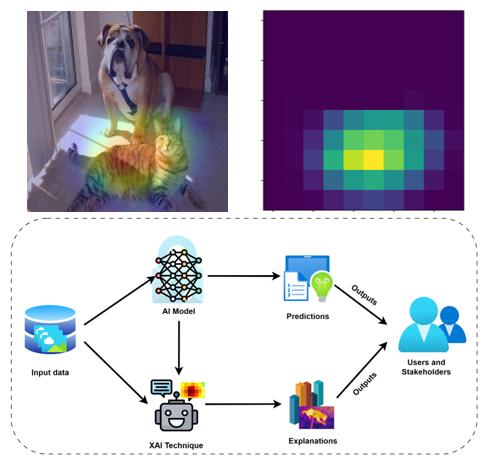

Artificial intelligence (AI), a rapidly developing technology, has revolutionized many aspects of our lives. However, the inner workings of many AI models remain opaque, and such models are frequently compared to a "black box." In critical domains in particular, this lack of explainability hinders responsible AI research and erodes public confidence, and it has fueled a growing demand for transparency and interpretability in AI decision-making. In response, this paper introduces VisionCam, an extensible Python toolkit for explainable AI (XAI). The toolkit implements nine state-of-the-art techniques for explaining the decisions of AI models, especially deep learning models, in image processing: GradCAM, GradCAM++, GradCAMElementWise, HiResCAM, RespondCAM, ScoreCAM, SmoothGradCAM++, XgradCAM, and AblationCAM. Each technique offers a distinct view of how deep learning models that process image data reach their decisions, addressing a different aspect of interpretability. Through case studies, we demonstrate how the toolkit improves the transparency and interpretability of AI systems that analyze visual information. The source code for the VisionCam toolkit is available at https://github.com/VisionCAM.


Article Details

This work is licensed under a Creative Commons Attribution 4.0 International License.